Hey Guys!

Let’s start with a short introduction to voice bots, their types and their use cases. After that we will move step by step into the actual code and implementation.

What is VoiceBot

Voice bots are virtual assistants that answer phone calls the way a human agent would. They can be trained to understand callers’ queries and talk with them. When you call a call centre, a human agent normally receives the call and answers your questions. With voice bots, customer calls can instead be connected to a bot that handles routine problems and FAQs.

Many big providers offer voice assistant services, such as Twilio Autopilot, Amazon Lex and many more. These providers integrate voice bots seamlessly with US numbers and numbers from other countries. But what about Indian SIP or PRI numbers? How do we integrate them with voice bots? Purchasing Indian toll-free numbers from Twilio-like platforms is too costly, so Indian DID numbers cannot take advantage of those services.

To solve this, we can build a voice bot from scratch on an open source PBX such as Asterisk and connect an Indian SIP trunk to it. This way Indian numbers can also reach a custom-developed voice bot, and it is cheaper to run because you use your own Indian SIP trunks.

Types of Voice bot

Generally we can classify voice bots into two main categories.

1. Conversational Voice Bot

These are very simple bots that answer the questions users ask. The answers are static responses, much like informative messages.

Example:

User: What internet plans are you providing?

Bot: Yes, we have these three highly used internet plans. The first one is 60 Mbps at 6000 per year. The second one is 80 Mbps at 8000 per year and third one is 100 Mbps at 10000 per year.

2. Advanced Voice Bot

These are medium to highly complex bots that can look up databases, integrate with external APIs and generate dynamic, custom responses so that they feel more human.

Example:

Bot: Dear [Ankit] welcome to our company. How can I help you?

User: Can you please check my last ticket status?

Bot: Yes, [Ankit], your last registered issue is in progress and will be resolved today by 7 pm at the latest.

Bot: Welcome to xyz Bank. How can I help you?

User: I want to know about my balance

Bot: Sure, for that we need to verify your account. Please tell me the last four digits of your account number.

User: Ya, it is 1415

Bot: Okay, we have sent you an OTP of six digits to your registered mobile number. Please tell the OTP you received.

User: The OTP is 121912

Bot: Great, you have successfully verified your account and your balance is 20,000 INR

Why VoiceBot

Voice bots can work 24x7 and resolve customer queries around the clock. Call centers are adopting this technology so that they can run with fewer human agents. The most important thing about such AI bots is that they can be trained on user inputs, so over time they get better at understanding the caller’s context. They understand callers better and answer calls more effectively, which gives the customer a more personalized experience.

Voice bots have many use cases. You can build one for appointment booking, order booking, FAQ answering and so on.

Voice bot Architecture

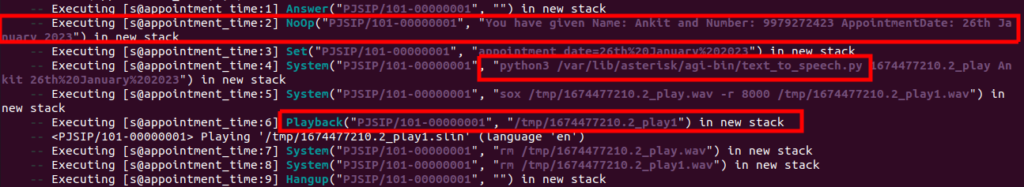

I have worked with Asterisk and Rasa to build voice bots from scratch. Rasa is a chatbot framework that can be used for intent classification and entity extraction during the conversation. The image above shows the architecture design I used for the voice bot.

In this design I have used MS Azure for the speech-to-text and text-to-speech services, but any other provider can be used as well. As the image shows, the voice bot includes the following elements:

1. Asterisk – For voice call processing

2. STT/TTS – Speech to text (STT) turns the user’s speech into text, and text to speech (TTS) turns the bot’s reply back into audio.

3. Rasa – Provides the NLU and NLP units.

Rasa works on the user input in text format. It does intent classification (the context in which the user is talking) and entity extraction (the useful information in the user input, e.g. in “my number is 997927xxxx” the number is the entity we want to capture).
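To make intent classification and entity extraction more concrete, here is a minimal Python sketch that pulls the intent and the first entity out of a Rasa-style parse result. The sample dictionary is hand-written for illustration (the confidence value is made up, not real model output), and `summarize_parse` is a hypothetical helper name, not something from the project.

```python
def summarize_parse(result):
    """Return (intent, entity_name, entity_value) from a Rasa-style parse result."""
    intent = (result.get("intent") or {}).get("name") or ""
    entities = result.get("entities") or []
    if entities:
        first = entities[0]
        return intent, str(first.get("entity", "")), str(first.get("value", ""))
    # No entity found: return empty strings so callers can test with len()
    return intent, "", ""


# Hand-written sample in the general shape of Rasa's NLU parse output.
# The values are illustrative assumptions only.
sample = {
    "text": "my number is 997927xxxx",
    "intent": {"name": "mobile_number", "confidence": 0.97},
    "entities": [{"entity": "number", "value": "997927xxxx"}],
}

intent, entity_name, entity_value = summarize_parse(sample)
print(intent, entity_name, entity_value)
```

Returning empty strings instead of `None` keeps the values easy to pass on to the dialplan, where an empty variable simply means nothing was detected.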

Let’s jump into the actual code!!!

Below is a sample of the extensions.conf file that handles the voice bot call processing.

[outgoing]

exten => 111,1,Answer()

same => n,Set(exten=${EXTEN})

same => n,MixMonitor(,r(/tmp/${UNIQUEID}.wav)i(mix_mon_id))

same => n,BackGroundDetect(welcome-8khz,1000,500)

same => n,BackGroundDetect(silence/5,1000,500)

same => n,BackGroundDetect(did_not_understand-8khz,1000,500)

same => n,BackGroundDetect(silence/5,1000,500)

same => n,BackGroundDetect(did_not_understand-8khz,1000,500)

same => n,WaitExten(2)

exten => talk,1,Noop(Talk detection completed….)

same => n,StopMixMonitor(${mix_mon_id})

same => n,System(sox /tmp/${UNIQUEID}.wav /tmp/${UNIQUEID}-1.wav silence 1 0.1 1%)

same => n,AGI(speech_to_text.py,/tmp/${UNIQUEID}-1)

same => n,Noop(The Intent : ${intent} EntityName : ${entity_name} EntityValue : ${entity_value})

same => n,System(rm /tmp/${UNIQUEID}.wav)

same => n,System(rm /tmp/${UNIQUEID}-1.wav)

same => n,Gotoif($[${LEN(${intent})}>0]?goto_intent:goto_again)

same => n(goto_intent),Goto(${intent},s,1)

same => n(goto_again),Goto(${CONTEXT},${exten},1)

exten => t,1,Noop(Time out happened…)

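; stop the running recording before deleting its file and retrying

same => n,StopMixMonitor(${mix_mon_id})

same => n,System(rm /tmp/${UNIQUEID}.wav)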

same => n,Goto(${CONTEXT},${exten},1)

same => n,Hangup()

Let’s go through the code above step by step. The sample dialplan uses the following main applications.

MixMonitor: Records the call audio. Because we pass the r option, only the customer’s (received) audio is written to the file.

BackGroundDetect: Plays the prompt in the background and can be interrupted by the caller’s voice. The two numeric arguments are the silence threshold (1000 ms) and the minimum talk duration (500 ms). When talk is detected, the call jumps to the talk extension.

WaitExten: Waits for user input or a timeout. If nothing happens within the given time (2 seconds here), the call times out and moves to the t extension.
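The talk detection rule can be pictured with a small Python model. This is only a simplified sketch of the documented behaviour (a stretch of speech longer than the minimum, followed by enough silence), not Asterisk’s actual implementation. The function name `talk_detected` and the pre-segmented input format are assumptions made for illustration.

```python
import math


def talk_detected(segments, sil_ms=1000, min_ms=500, max_ms=math.inf):
    """Simplified model of the talk detection rule.

    segments: list of (is_speech, duration_ms) tuples in call order.
    Returns True when a speech segment longer than min_ms and shorter
    than max_ms is followed by at least sil_ms of silence.
    """
    for (is_speech, dur), (next_is_speech, next_dur) in zip(segments, segments[1:]):
        if not is_speech:
            continue
        if min_ms < dur < max_ms and not next_is_speech and next_dur >= sil_ms:
            return True
    return False


# 1.2 s of speech followed by 1 s of silence counts as talk
print(talk_detected([(False, 300), (True, 1200), (False, 1000)]))  # True
# a 200 ms noise burst is too short to count
print(talk_detected([(True, 200), (False, 1500)]))  # False
```

With the values used in the dialplan (1000 and 500), a short cough or click is ignored, while a spoken sentence followed by a one-second pause triggers the jump to the talk extension.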

When talk is detected, we stop recording the caller’s voice (StopMixMonitor) and clean the recorded input with a sox command that trims the silence at the start of the file. Then we do speech to text with the AGI script speech_to_text.py. After STT we query the Rasa server with the generated text. The Rasa server classifies the intent of the input and extracts an entity if one is present.
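To show how an AGI script can hand values back to the dialplan, here is a minimal Python sketch of the AGI side of this step. It is not the speech_to_text.py from the repository. The helper names are hypothetical, the Asterisk header block is simulated with a string, and the result dictionary is a placeholder for what STT plus Rasa would return.

```python
import io


def read_agi_environment(stream):
    """Read the 'agi_key: value' lines Asterisk sends first, up to the blank line."""
    env = {}
    for line in stream:
        line = line.strip()
        if not line:
            break
        key, _, value = line.partition(":")
        env[key.strip()] = value.strip()
    return env


def set_variable_command(name, value):
    """Format an AGI SET VARIABLE command for one channel variable."""
    # Escape backslashes and quotes so the value stays a single argument
    escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'SET VARIABLE {name} "{escaped}"\n'


# Simulated header block; in a real AGI this would be read from sys.stdin.
fake_stdin = io.StringIO(
    "agi_request: speech_to_text.py\n"
    "agi_arg_1: /tmp/1676012345.7-1\n"
    "\n"
)
env = read_agi_environment(fake_stdin)
audio_base = env["agi_arg_1"]  # path passed from the dialplan, without ".wav"

# Placeholder result (assumption); a real script would fill this from STT and Rasa.
result = {"intent": "book_appointment", "entity_name": "", "entity_value": ""}
commands = [set_variable_command(name, value) for name, value in result.items()]
print("".join(commands), end="")
```

In a real AGI script each command is written to standard output and flushed, and Asterisk’s one-line reply is read from standard input before the next command is sent. The variable names intent, entity_name and entity_value match the ones the dialplan prints in its Noop line.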

After intent detection we delete the temporary wav files. The call then moves to the context named after the detected intent for further processing. We create one context per intent name in the dialplan, for example [book_appointment], [my_name], [mobile_number], [appointment_time] and so on. These are the intent names returned by Rasa, and each context handles the task for its intent.

If no intent is detected, we send the call back to the same context to repeat the question. You can see this in the goto_intent and goto_again labels in the dialplan.

On a timeout we likewise delete the wav file and send the call back to the same context to ask the same question again.
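The routing decision described above can be written out as a small Python sketch. The dialplan itself only checks whether the intent is non-empty. The extra check against a set of known contexts is my own assumption, added so that an unexpected intent name retries the question instead of jumping to a context that does not exist. `next_target` and `KNOWN_INTENTS` are hypothetical names.

```python
# Hypothetical set of contexts defined in extensions.conf
# (names taken from the examples in this post).
KNOWN_INTENTS = {"book_appointment", "my_name", "mobile_number", "appointment_time"}


def next_target(intent, context, exten, known_intents=KNOWN_INTENTS):
    """Return the (context, extension, priority) the call should jump to next."""
    if intent and intent in known_intents:
        # Same as the goto_intent label: Goto(${intent},s,1)
        return (intent, "s", 1)
    # Same as the goto_again label: Goto(${CONTEXT},${exten},1)
    return (context, exten, 1)


print(next_target("book_appointment", "outgoing", "111"))
print(next_target("", "outgoing", "111"))
```

The first call routes into the intent’s own context, while the second falls back to the original context and extension so the bot repeats its question.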

I have not shown the Rasa installation and configuration here, as we are focusing mainly on the Asterisk side. To install Rasa you can follow this link: https://learning.rasa.com/installation/ubuntu/

The full code for the project is available at, https://github.com/ankitjayswal87/VoiceBot

This GitHub repository contains the code for extensions.conf, the speech-to-text and text-to-speech AGIs, the voice prompts and the rasa-project folder. With this code you can experiment and see how the pieces relate to each other. I will cover later how to query Rasa NLU to classify the intent and entities.

Please visit link, https://telephonyhub.in/2023/02/10/how-to-query-rasa-server-via-curl-request/ to see how we can query Rasa server via API.
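For readers who prefer Python over curl, here is a rough sketch of the same kind of query using only the standard library. It assumes a Rasa server running locally on port 5005 with its HTTP API enabled and uses the /model/parse endpoint. The URL, the function names and the timeout are assumptions for illustration, not code from the repository.

```python
import json
import urllib.request

# Assumption: local Rasa server on the default port with the HTTP API enabled
RASA_PARSE_URL = "http://localhost:5005/model/parse"


def build_parse_request(text, url=RASA_PARSE_URL):
    """Build the POST request that asks Rasa to parse one user message."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_text(text, url=RASA_PARSE_URL, timeout=5):
    """Send the message to Rasa and return the decoded JSON reply."""
    with urllib.request.urlopen(build_parse_request(text, url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Building the request needs no running server, so we can inspect it safely.
req = build_parse_request("I want to book an appointment")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Calling `parse_text("I want to book an appointment")` against a running server should return a dictionary containing the intent and entities. The request building is kept separate so it can be checked without a server.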

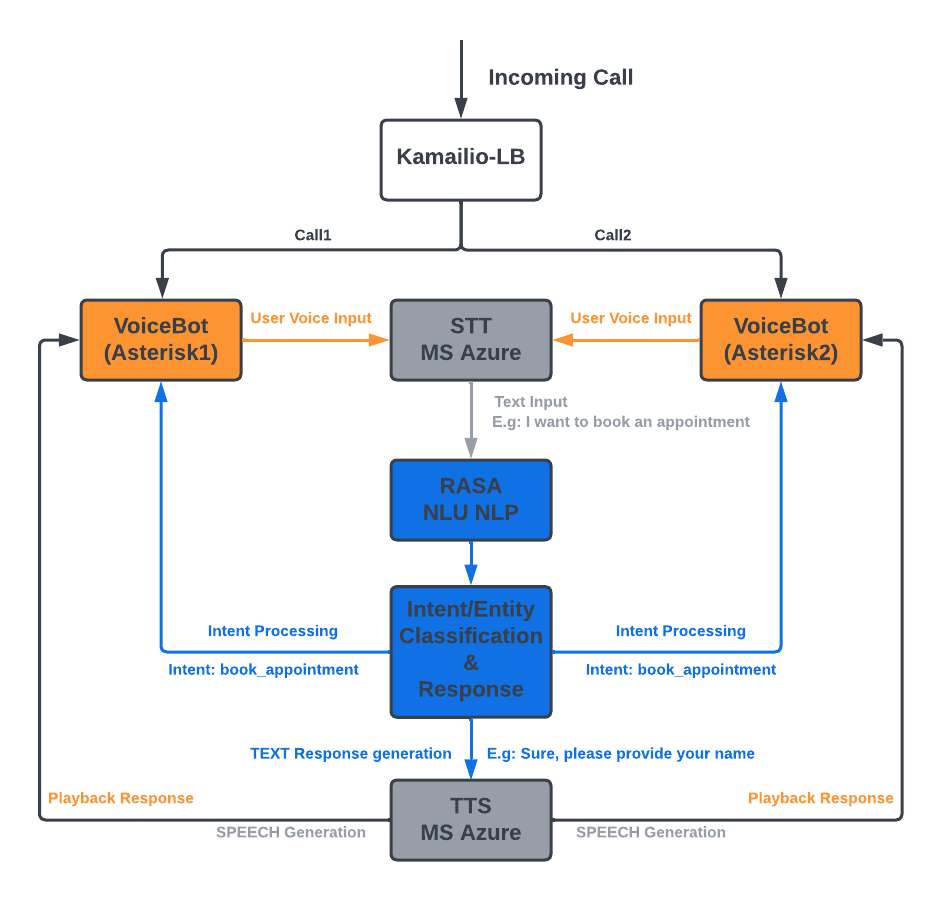

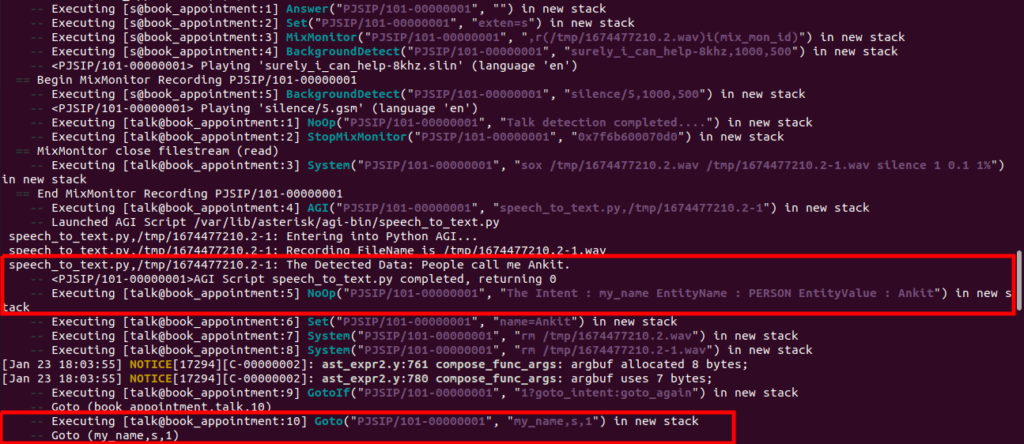

Here I am sharing the Asterisk CLI logs for a simple appointment booking voice bot demo. They show the intent classification and entity extraction (entities: name, number, date etc.). The intent name is also the name of the context, so as soon as the intent is classified the call proceeds into that intent’s context. The dialplan logs below correspond to the extensions.conf file in the GitHub repository mentioned above, which you can study and play with. Note that I used Microsoft Azure for the STT and TTS services, so you will need an Azure account and must put the necessary credentials into the constants.py file.

So, when I dialed extension 111 from my registered softphone, the call entered the dialplan and the welcome announcement played.

User said “I want to book an appointment.”

Now the bot is asking for the name.

User said “People call me Ankit.”

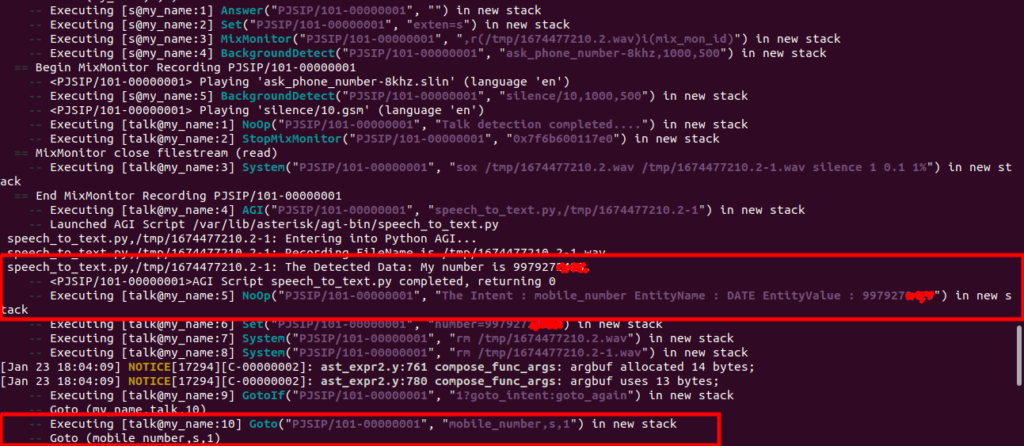

Now the bot is asking for the contact number.

User said “My number is 997927xxxx.”

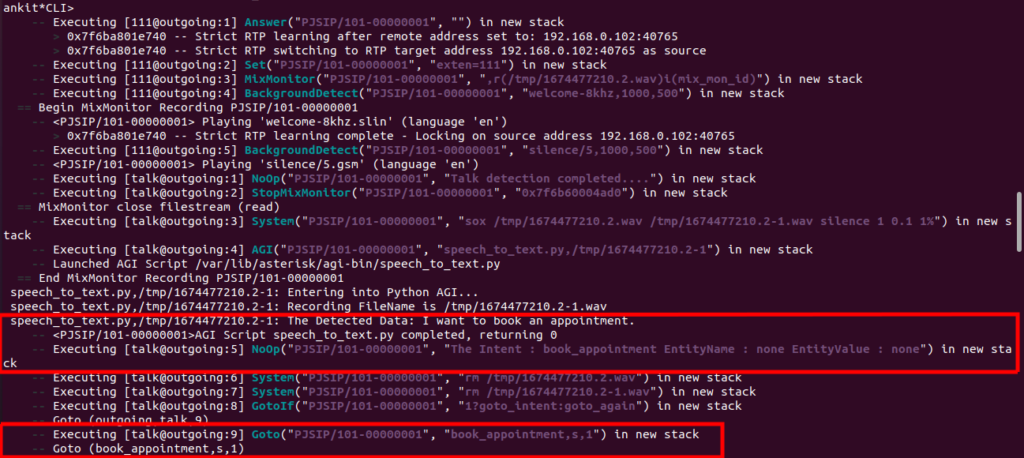

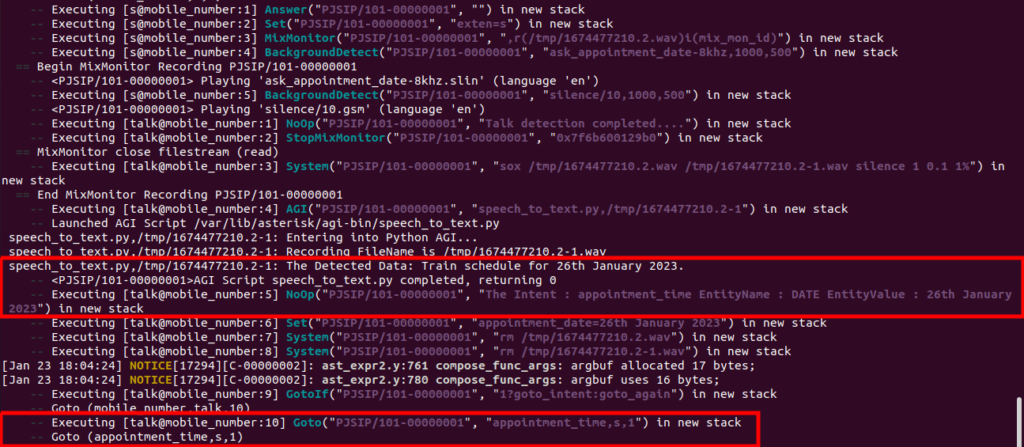

Now the bot is asking for the appointment date.

User said “Train schedule for 26th January 2023.”

The call went to the final appointment_time context and, after text to speech, it dynamically played the final response.

Bot spoke “Thank you, Ankit your appointment has been booked now on 26th January 2023 Your booking id is 3 5 4 7”